In this post, we demonstrate how to use Fly Machines to run Django Views as Serverless Functions. Django on Fly.io is pretty sweet! Check it out: you can be up and running on Fly.io in just minutes.

In my last post, we ran custom Django commands as serverless functions by defining a new process to run within a Machine. This article continues the series by turning our Django views into serverless functions, this time by defining a service.

But first, let’s understand some concepts about Fly Machines.

Fly Machines processes

When running a custom Django command, we define the command as a separate process under processes in the Machine config:

import os

import requests

FLY_API_HOSTNAME = "https://api.machines.dev"
FLY_API_TOKEN = os.environ.get("FLY_API_TOKEN")
FLY_APP_NAME = os.environ.get("FLY_APP_NAME")
FLY_IMAGE_REF = os.environ.get("FLY_IMAGE_REF")

headers = {
    "Authorization": f"Bearer {FLY_API_TOKEN}",
    "Content-Type": "application/json",
}


def create_process_machine(cmd):
    config = {
        "config": {
            "image": FLY_IMAGE_REF,
            "auto_destroy": True,
            "processes": [  # ← processes here!
                {
                    "cmd": cmd.split(" ")
                }
            ],
        }
    }
    url = f"{FLY_API_HOSTNAME}/v1/apps/{FLY_APP_NAME}/machines"
    response = requests.post(
        url, headers=headers, json=config
    )
    if response.ok:
        return response.json()
    else:
        response.raise_for_status()

The Django command (cmd) is passed as an argument to the entrypoint of our Docker container. In this case, the Machine stops when the process exits without an error. When that happens, the Machine destroys itself, as per "auto_destroy": True. This works well for Django commands, which run as their own process and exit when the command finishes.
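One detail of the snippet above worth a second look is cmd.split(" "), which tears quoted arguments apart. A minimal sketch, assuming the command string follows shell quoting rules, uses the standard library's shlex.split instead. The helper name build_cmd and the send_report command are hypothetical, used only for illustration:

```python
import shlex


def build_cmd(cmd: str) -> list[str]:
    """Tokenize a command string the way a POSIX shell would."""
    return shlex.split(cmd)


# Hypothetical management command with a quoted argument.
command = 'python manage.py send_report --subject "Weekly sales"'

# Plain whitespace splitting breaks the quoted argument in two...
naive = command.split(" ")
# ...while shlex keeps it as a single argument and drops the quotes.
safe = build_cmd(command)
```

For commands without quoted arguments both approaches produce the same list, so this only matters once arguments contain spaces.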

On the other hand, as mentioned before, when creating and destroying machines we should take the boot time into consideration. An interesting alternative is to reuse existing Machines by starting and stopping them as needed. That’s what we do in this post: create a machine to offload some work, stop it when it’s idle and start it when a new request comes in. For that to happen automatically, we set up a service.
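In this post the Fly Proxy starts and stops the Machine for us, but the same thing can be done by hand through the Machines API start and stop endpoints. The sketch below is an illustration under assumptions, not code from the demo: machine_action_url and change_machine_state are hypothetical helper names, and the second function needs network access and a valid token to do anything.

```python
FLY_API_HOSTNAME = "https://api.machines.dev"


def machine_action_url(app_name: str, machine_id: str, action: str) -> str:
    """Build the Machines API URL that starts or stops an existing Machine."""
    if action not in ("start", "stop"):
        raise ValueError(f"unsupported action: {action}")
    return (
        f"{FLY_API_HOSTNAME}/v1/apps/{app_name}"
        f"/machines/{machine_id}/{action}"
    )


def change_machine_state(app_name, machine_id, action, token):
    """POST to the start or stop endpoint and return the JSON response."""
    # Third-party dependency, imported lazily so the URL helper
    # above stays usable without it.
    import requests

    response = requests.post(
        machine_action_url(app_name, machine_id, action),
        headers={"Authorization": f"Bearer {token}"},
    )
    response.raise_for_status()
    return response.json()
```

Keeping the URL construction separate from the HTTP call makes the helper easy to check without touching the network.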

Fly Machines services

By default, Machines are closed to the public internet. To make them accessible, we need to allocate an IP address to the Fly App and add one or more services.

This happens automatically when we fly launch a Django app on Fly.io and the fly.toml config file is generated with:

# fly.toml
...
[http_service]
  internal_port = 8000
  force_https = true
  auto_stop_machines = true
  auto_start_machines = true
  min_machines_running = 0
  processes = ["app"]

[http_service] is a simplified version of the [[services]] section for apps that only need HTTP and HTTPS services.

In services, we specify how to expose our application to the outside world, including the settings for routing incoming requests to the appropriate components of our application.

To create a Machine with a service via the Machines API, we would have something like this:

import os

import requests

FLY_API_HOSTNAME = "https://api.machines.dev"
FLY_API_TOKEN = os.environ.get("FLY_API_TOKEN")
FLY_APP_NAME = os.environ.get("FLY_APP_NAME")
FLY_IMAGE_REF = os.environ.get("FLY_IMAGE_REF")

headers = {
    "Authorization": f"Bearer {FLY_API_TOKEN}",
    "Content-Type": "application/json",
}


def create_service_machine():
    config = {
        "config": {
            "image": FLY_IMAGE_REF,
            "services": [  # ← services here!
                {
                    "protocol": "tcp",
                    "internal_port": 8000,
                    # Fly Proxy stops Machines when
                    # this service goes idle
                    "autostop": True,
                    "ports": [
                        {
                            "handlers": ["tls", "http"],
                            # The internet-exposed port
                            # to receive traffic on
                            "port": 443,
                        },
                        {
                            "handlers": ["http"],
                            # The internet-exposed port
                            # to receive traffic on
                            "port": 80
                        },
                    ],
                }
            ],
        }
    }
    url = (
        f"{FLY_API_HOSTNAME}/v1/apps/{FLY_APP_NAME}/machines"
    )
    response = requests.post(
        url, headers=headers, json=config
    )
    if response.ok:
        return response.json()
    else:
        response.raise_for_status()

create_service_machine() creates a new machine with public access - we’ll refer to this machine as the serverless machine throughout this article.

That’s awesome, but as I mentioned before, this machine is accessible from the public internet. We don’t want it processing every incoming request to our main application, only requests to specific views.

To accomplish that, I need to introduce you to the fly-replay response header.

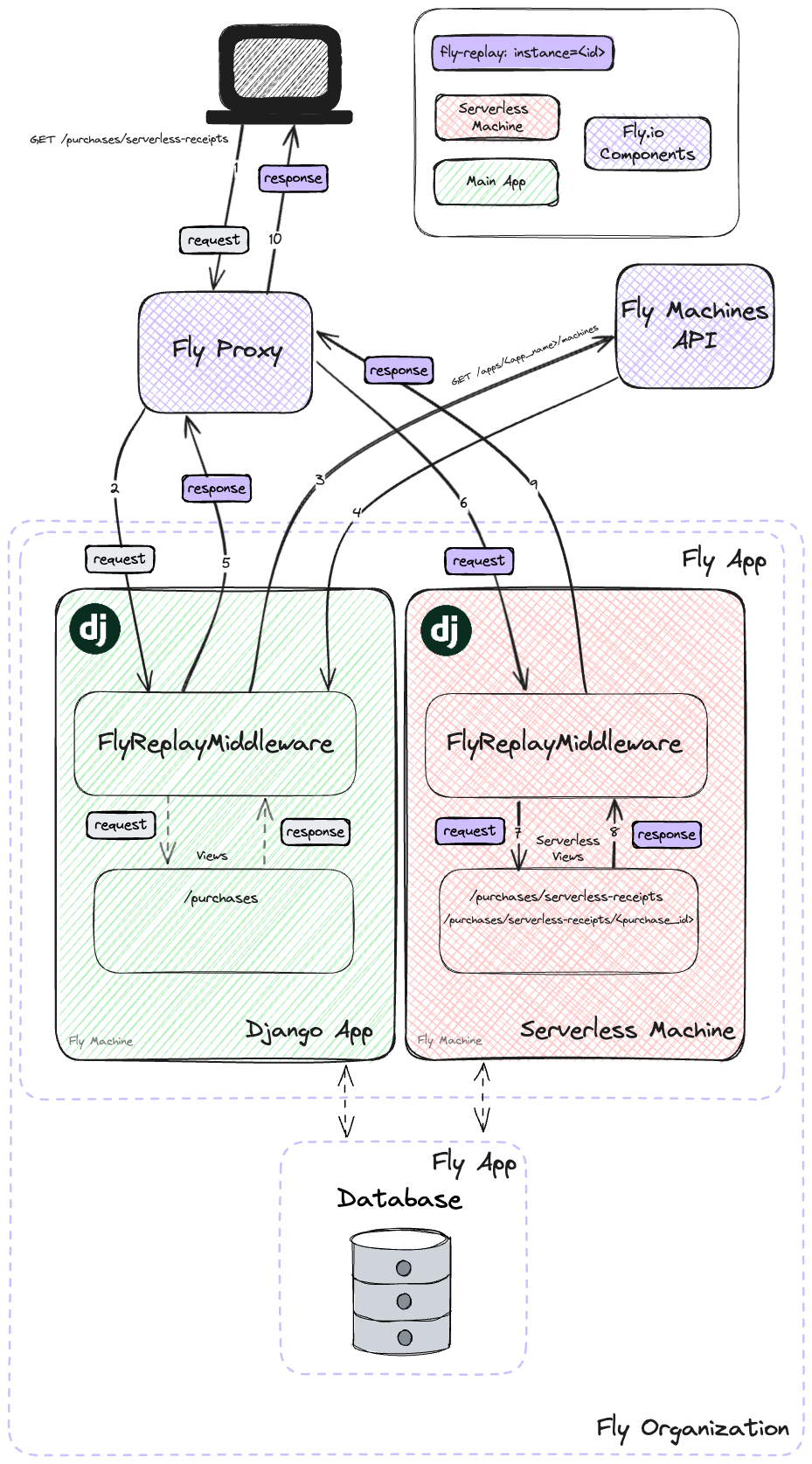

fly-replay 🔁

Fly Proxy sits between our application and the public internet and is responsible for routing requests to the appropriate Machines based on their location and availability - among many other interesting features!

One of its capabilities is redelivering (replaying) the original request somewhere else:

- in another region;

fly-replay: region=gru

- in another app in the same organization;

fly-replay: app=another-app

- in a specific machine;

fly-replay: instance=9020ee72fd7948

- anywhere other than the Machine that responded.

fly-replay: elsewhere=true

You can find more details in Playing Traffic Cop with Fly-Replay.
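To make the header values above concrete, here is a small sketch that builds them. It assumes only the four fields shown in this post (the real header supports more) and that several fields are joined with semicolons, which is how I understand the header format. The function name fly_replay_value is hypothetical:

```python
def fly_replay_value(**targets: str) -> str:
    """Build a fly-replay header value from the fields used in this post."""
    allowed = {"region", "app", "instance", "elsewhere"}
    unknown = set(targets) - allowed
    if unknown:
        raise ValueError(f"unsupported fly-replay fields: {sorted(unknown)}")
    # Several fields are joined with semicolons, in the order given.
    return ";".join(f"{key}={value}" for key, value in targets.items())


# A single target, as in the examples above.
single = fly_replay_value(instance="9020ee72fd7948")
# Two targets combined in one header value.
combined = fly_replay_value(region="gru", app="another-app")
```

The resulting string is what we would assign to response["fly-replay"] in a Django view or middleware.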

For our Django app, it means we can reroute the request to the serverless machine once we receive a request to a specific Django view.

Aha! Exactly what we’ve been looking for! 😎

Enough of concepts for now… Let’s check how we can implement that in Django.

Django Views as Serverless Functions ✨

For our demo, we simulate a store with purchases and products. A purchase has a list of product items:

We want to offload the receipt’s PDF generation to the serverless function.

These are the main steps we want to achieve:

- Client calls our endpoint GET /purchases/serverless-receipts
- Fly Proxy receives the request and sends it to our Django App
- Our Django app receives the request and it goes through the Middleware layer
- Our custom Middleware FlyReplayMiddleware checks if the request should be handled in the current Machine or should be replayed in another Machine by evaluating the path requested
- GET /purchases/serverless-receipts is our serverless view. Fetch the serverless machine info and add fly-replay: instance=<id> to the response header, where <id> is the Machine id where we should run our serverless view
- Fly Proxy receives a response and recognizes the fly-replay header, so it redirects the original request to another Machine, in this case, our serverless machine
- The serverless machine receives the request, processes it, and returns the response back to the Fly Proxy
- Fly Proxy sends the response back to the client
- Win! 🎖

This is the happy path that doesn’t take into consideration other possibilities:

- If the serverless machine doesn’t exist: we create a new one and get the info to be added to the fly-replay response header.
- If the serverless machine receives a non-serverless view request: the FlyReplayMiddleware will be responsible for rerouting the request back to our main application.

We will discuss those scenarios throughout this article and how to handle them.

Time to start coding! 👩🏽‍💻✨

views.py

Let’s start by defining our views:

# <app>/views.py
# store/views.py
from django.http import HttpResponse
from django.shortcuts import get_object_or_404
from django.views.decorators.http import require_GET

from store.helpers import generate_pdf  # helper that generates the PDF
from store.models import Purchase  # assumes Purchase lives in store/models.py


@require_GET
def machines_generate_receipts(request):
    purchases = Purchase.objects.all()
    buffer = generate_pdf(purchases)  # generate the PDF
    return HttpResponse(buffer, content_type="application/pdf")


@require_GET
def machines_generate_receipt(request, purchase_id):
    purchase = get_object_or_404(Purchase, id=purchase_id)
    buffer = generate_pdf([purchase])  # generate the PDF
    return HttpResponse(buffer, content_type="application/pdf")

machines_generate_receipts() generates and renders a PDF file for all the purchases we currently have. machines_generate_receipt() generates and renders a PDF file for a specific purchase.

urls.py

The URLs are defined as follows:

# <app>/urls.py
# store/urls.py
from django.urls import path

from .views import (
    machines_generate_receipt,
    machines_generate_receipts,
)

app_name = "store"

urlpatterns = [
    path(
        "purchases/serverless-receipts/",
        machines_generate_receipts,
        name="machines_generate_receipts",
    ),
    path(
        "purchases/serverless-receipts/<int:purchase_id>/",
        machines_generate_receipt,
        name="machines_generate_receipt",
    ),
]

We aim to run machines_generate_receipts and machines_generate_receipt views as serverless functions.

settings.py

Now, let’s define in settings.py the environment variables we need to create new machines:

# <project>/settings.py
# fly/settings.py
import environ

env = environ.Env()

FLY_API_HOSTNAME = "https://api.machines.dev"
FLY_API_TOKEN = env("FLY_API_TOKEN")
FLY_IMAGE_REF = env("FLY_IMAGE_REF")
FLY_APP_NAME = env("FLY_APP_NAME")

- FLY_API_HOSTNAME: the recommended way to connect with the Machines API is to use the public API https://api.machines.dev
- FLY_API_TOKEN: it’s possible to create a new auth token in your Fly.io dashboard. For local development, you can simply reuse the token used by flyctl by running:

fly secrets set FLY_API_TOKEN=$(fly auth token)

- FLY_IMAGE_REF and FLY_APP_NAME: those are environment variables available to us when our app is deployed on Fly.io.

We also specify the list of views to run as serverless functions:

# <project>/settings.py
# fly/settings.py
FLY_MACHINES_SERVERLESS_FUNCTIONS = [
    # ("<app>:<path_name>", (<methods>))
    ("store:machines_generate_receipts", ("GET",)),
    ("store:machines_generate_receipt", ("GET",)),
]

Now, let’s work with the Fly Machines API.

Fly Machines

To keep all the logic around Machines in one place, let’s define a machines.py:

# <app>/machines.py
# store/machines.py
import requests
from django.conf import settings
from django.urls import resolve, Resolver404

FLY_API_HOSTNAME = settings.FLY_API_HOSTNAME
FLY_API_TOKEN = settings.FLY_API_TOKEN
FLY_APP_NAME = settings.FLY_APP_NAME
FLY_IMAGE_REF = settings.FLY_IMAGE_REF

headers = {
    "Authorization": f"Bearer {FLY_API_TOKEN}",
    "Content-Type": "application/json",
}


def machines_url():
    return f"{FLY_API_HOSTNAME}/v1/apps/{FLY_APP_NAME}/machines"


def create_serverless_machine():
    config = {
        "config": {
            "image": FLY_IMAGE_REF,
            "env": {"IS_FLY_MACHINE_SERVERLESS": "true"},
            "services": [
                {
                    "protocol": "tcp",
                    "internal_port": 8000,
                    "autostop": True,
                    "ports": [
                        {
                            "handlers": ["tls", "http"],
                            "port": 443,
                        },
                        {
                            "handlers": ["http"],
                            "port": 80
                        },
                    ],
                }
            ],
        }
    }
    response = requests.post(
        machines_url(), headers=headers, json=config
    )
    if response.ok:
        return response.json()
    else:
        response.raise_for_status()


def get_or_create_serverless_machine():
    response = requests.get(
        machines_url(),
        headers=headers,
        params={"include_deleted": False},
    )
    if response.ok:
        machines = response.json()
        for machine in machines:
            if (
                machine["config"]["env"].get(
                    "IS_FLY_MACHINE_SERVERLESS", "false"
                )
                == "true"
            ):
                return machine
    else:
        response.raise_for_status()
    return create_serverless_machine()


def is_serverless_function_request(request):
    for pattern, methods in getattr(
        settings, "FLY_MACHINES_SERVERLESS_FUNCTIONS", []
    ):
        request_method = request.method
        try:
            resolved_match = resolve(request.path)
            url_name = resolved_match.url_name
            app_name = resolved_match.app_name
            if (
                f"{app_name}:{url_name}" == pattern
                and request_method in methods
            ):
                return True
        except Resolver404:
            continue
    return False

That was a lot of code. Shall we break this down?

Let’s start from the top:

def create_serverless_machine():
    config = {
        "config": {
            "image": FLY_IMAGE_REF,
            "env": {"IS_FLY_MACHINE_SERVERLESS": "true"},
            "services": [
                {
                    "protocol": "tcp",
                    "internal_port": 8000,
                    "autostop": True,
                    "ports": [
                        {
                            "handlers": ["tls", "http"],
                            "port": 443,
                        },
                        {
                            "handlers": ["http"],
                            "port": 80
                        },
                    ],
                }
            ],
        }
    }
    response = requests.post(
        machines_url(), headers=headers, json=config
    )
    if response.ok:
        return response.json()
    else:
        response.raise_for_status()

create_serverless_machine() creates a new machine using the current Docker image of our Django app (FLY_IMAGE_REF). It spins up a new machine identical to our main app. The configurations worth highlighting are:

"config": {
    "env": {
        "IS_FLY_MACHINE_SERVERLESS": "true"
    }
    ...
}

- in config.env: the key/value pair to be set as an environment variable.

We’ll use the IS_FLY_MACHINE_SERVERLESS environment variable to identify the machine on which the serverless view should run. Note: any key/value pair should be set as <string>:<string>, e.g. "IS_FLY_MACHINE_SERVERLESS": "true".

"config": {
    ...
    "services": [{
        ...
        "autostop": True,
    }]
}

- in config.services: setting autostop to true

autostop is false by default. When true, the Fly Proxy automatically stops the machine when idle, so you don’t pay for the machine when there is no traffic. That’s the trick!
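Coming back to the note that config.env values must be strings: a small sketch of how Python values could be normalized before they go into the Machine config. The helper to_machine_env and the WORKERS key are hypothetical, and the lowercase "true"/"false" spelling is chosen only to match the check used in this post:

```python
def to_machine_env(values: dict) -> dict:
    """Convert Python values to the string pairs expected in config.env."""
    env = {}
    for key, value in values.items():
        if isinstance(value, bool):
            # Lowercase booleans, matching the "true" comparison in this post.
            env[str(key)] = "true" if value else "false"
        else:
            env[str(key)] = str(value)
    return env


# Hypothetical input mixing a boolean and an integer.
machine_env = to_machine_env(
    {"IS_FLY_MACHINE_SERVERLESS": True, "WORKERS": 2}
)
```

Without this step, str(True) would produce "True", which the == "true" comparison used later would not match.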

def get_or_create_serverless_machine():
    response = requests.get(
        machines_url(),
        headers=headers,
        params={"include_deleted": False},
    )
    if response.ok:
        machines = response.json()
        for machine in machines:
            if (
                machine["config"]["env"].get(
                    "IS_FLY_MACHINE_SERVERLESS", "false"
                )
                == "true"
            ):
                return machine
    else:
        response.raise_for_status()
    return create_serverless_machine()

get_or_create_serverless_machine(), as the name suggests, gets the serverless machine or creates a new one. This is done by fetching all non-deleted machines and checking the IS_FLY_MACHINE_SERVERLESS environment variable we defined in create_serverless_machine().
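The lookup indexes machine["config"]["env"] directly, which would raise if a Machine in the list carried no env block. As a hedged sketch, assuming the API may return Machines whose config or env is missing or null, the filter can be pulled into a pure function that is easy to test. The name find_serverless_machine and the sample data are hypothetical:

```python
from typing import Optional


def find_serverless_machine(machines: list) -> Optional[dict]:
    """Return the first Machine flagged as serverless, or None."""
    for machine in machines:
        # Tolerate Machines whose "config" or "env" is missing or null.
        env = (machine.get("config") or {}).get("env") or {}
        if env.get("IS_FLY_MACHINE_SERVERLESS", "false") == "true":
            return machine
    return None


# Made-up sample shaped like the fields this post relies on.
sample = [
    {"id": "main-1", "config": {"env": {"PRIMARY_REGION": "gru"}}},
    {"id": "no-env", "config": {}},
    {"id": "srv-1", "config": {"env": {"IS_FLY_MACHINE_SERVERLESS": "true"}}},
]
found = find_serverless_machine(sample)
```

get_or_create_serverless_machine() could then call create_serverless_machine() only when this function returns None.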

def is_serverless_function_request(request):
    for pattern, methods in getattr(
        settings, "FLY_MACHINES_SERVERLESS_FUNCTIONS", []
    ):
        request_method = request.method
        try:
            resolved_match = resolve(request.path)
            app_name = resolved_match.app_name
            url_name = resolved_match.url_name
            if (
                f"{app_name}:{url_name}" == pattern
                and request_method in methods
            ):
                return True
        except Resolver404:
            continue
    return False

Lastly, is_serverless_function_request() checks the FLY_MACHINES_SERVERLESS_FUNCTIONS list from our settings.py and returns whether the current request (its path and method) should be handled by our serverless machine.
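The matching rule itself does not depend on Django, so it can be sketched as a pure function. This is an illustration only: matches_serverless_function is a hypothetical name, and it assumes the "<app>:<url_name>" string has already been obtained from resolve(request.path).

```python
FLY_MACHINES_SERVERLESS_FUNCTIONS = [
    ("store:machines_generate_receipts", ("GET",)),
    ("store:machines_generate_receipt", ("GET",)),
]


def matches_serverless_function(view_name, method, functions):
    """Return True if (view_name, method) is listed as a serverless function."""
    return any(
        view_name == pattern and method in methods
        for pattern, methods in functions
    )


# A listed view with an allowed method matches...
hit = matches_serverless_function(
    "store:machines_generate_receipt", "GET", FLY_MACHINES_SERVERLESS_FUNCTIONS
)
# ...while the same view with another method does not.
miss = matches_serverless_function(
    "store:machines_generate_receipt", "POST", FLY_MACHINES_SERVERLESS_FUNCTIONS
)
```

Splitting the check this way also means the URL only needs to be resolved once per request rather than once per configured pattern.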

Now, when a request to a serverless view comes in to our main app, what do we do? And when a request to a non-serverless view comes in to our serverless machine, what should we do?

There’s still a piece of the puzzle missing. 🧩

FlyReplayMiddleware

To wrap up, let’s build the last piece: our custom Middleware.

The Django Middleware operates globally. It acts as an intermediary between the request from the user and the response from the server.

We need a way to intercept the request when it comes in to decide if we should reroute it to another machine.

First, let’s create a middleware.py to define our custom middleware:

# <app>/middleware.py
# store/middleware.py
import logging
import os

import requests
from django.http import HttpResponse

from .machines import (
    get_or_create_serverless_machine,
    is_serverless_function_request,
)

logger = logging.getLogger(__name__)


class FlyReplayMiddleware:
    def __init__(self, get_response):
        self.get_response = get_response

    def __call__(self, request):
        if is_serverless_function_request(request):
            # Serverless view requested on the main app:
            # replay the request on the serverless machine
            if not os.environ.get("IS_FLY_MACHINE_SERVERLESS"):
                try:
                    response = HttpResponse()
                    serverless_machine = (
                        get_or_create_serverless_machine()
                    )
                    response[
                        "fly-replay"
                    ] = f"instance={serverless_machine['id']}"
                    return response
                except requests.exceptions.HTTPError:
                    logger.exception(
                        "Could not get or create the serverless machine"
                    )
                    return HttpResponse(
                        "Something went wrong", status=404
                    )
        elif (
            os.environ.get("IS_FLY_MACHINE_SERVERLESS") == "true"
        ):
            # Non-serverless view requested on the serverless
            # machine: replay the request somewhere else
            response = HttpResponse()
            response["fly-replay"] = "elsewhere=true"
            return response

        response = self.get_response(request)
        return response

Then add it to settings.py:

# <project>/settings.py
# fly/settings.py
MIDDLEWARE = [
    "django.middleware.security.SecurityMiddleware",
    "django.contrib.sessions.middleware.SessionMiddleware",
    "django.middleware.common.CommonMiddleware",
    "django.middleware.csrf.CsrfViewMiddleware",
    "django.contrib.auth.middleware.AuthenticationMiddleware",
    "django.contrib.messages.middleware.MessageMiddleware",
    "django.middleware.clickjacking.XFrameOptionsMiddleware",
    "store.middleware.FlyReplayMiddleware",  # ← Added!
]

As a reminder, Django applies the middleware in the order it’s defined in the list, top-down.

Now, let’s understand what our custom middleware is doing.

If our main app receives a request to a serverless view, it gets the serverless machine id and adds the fly-replay: instance=<id> to the response header to be replayed on that specific machine. The serverless machine is found based on the existence of IS_FLY_MACHINE_SERVERLESS environment variable.

If our Serverless Machine receives a request to a non-serverless view, it adds the fly-replay: elsewhere=true to the response header to be replayed anywhere else other than the serverless machine.

Otherwise, we just call the view and return the response as per usual.

Here is the table to better visualize the scenarios:

| Views | Main App | Serverless Machine |
|---|---|---|
| Serverless | add fly-replay: instance=<id> to the response header | call the view and return a response |
| Non-Serverless | call the view and return a response | add fly-replay: elsewhere=true to the response header |
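The table can also be read as a small pure function. The sketch below only illustrates the decision and is not the middleware itself; replay_decision and its parameters are hypothetical names. It returns the fly-replay header value to send, or None when the view should simply be called on the current machine:

```python
from typing import Optional


def replay_decision(
    is_serverless_view: bool,
    on_serverless_machine: bool,
    machine_id: str = "",
) -> Optional[str]:
    """Return the fly-replay value to send, or None to call the view here."""
    if is_serverless_view and not on_serverless_machine:
        # Main app got a serverless view: replay on the serverless machine.
        return f"instance={machine_id}"
    if not is_serverless_view and on_serverless_machine:
        # Serverless machine got a regular view: replay somewhere else.
        return "elsewhere=true"
    # Remaining two cells of the table: handle the request locally.
    return None
```

Each of the four combinations of arguments corresponds to one cell of the table.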

That’s it! You made it to the end! 🎉

Try it out 🚀

Last but not least, let’s try this demo out here:

Note: Receipts are generated using reportlab (reportlab==4.0.7).

Final Thoughts

The Machines API offers a way to create services that can seamlessly scale down to zero during periods of inactivity.

However, it’s crucial to emphasize that while working directly with the Machines API provides us with greater control over our Machines, it also involves assuming some of the orchestration responsibility.

This post covers creating a serverless Machine on the spot and using it to invoke specific Django views. Note that topics such as deployment, latency and replacing the serverless machine with new versions are not covered here. Let’s save them for a future blog post, shall we?

Our demo also demonstrates some important and interesting concepts about Fly Machines, in particular, processes, services, Fly Proxy and fly-replay.

Such an approach can also be applied to other use cases previously discussed in this blog. If Django Async Views fall short in preventing the blocking of your main application, and a task queue such as Celery seems like overkill for your not-so-often but resource-intensive or I/O-bound operations, you might want to explore Fly Machines as an alternative.
